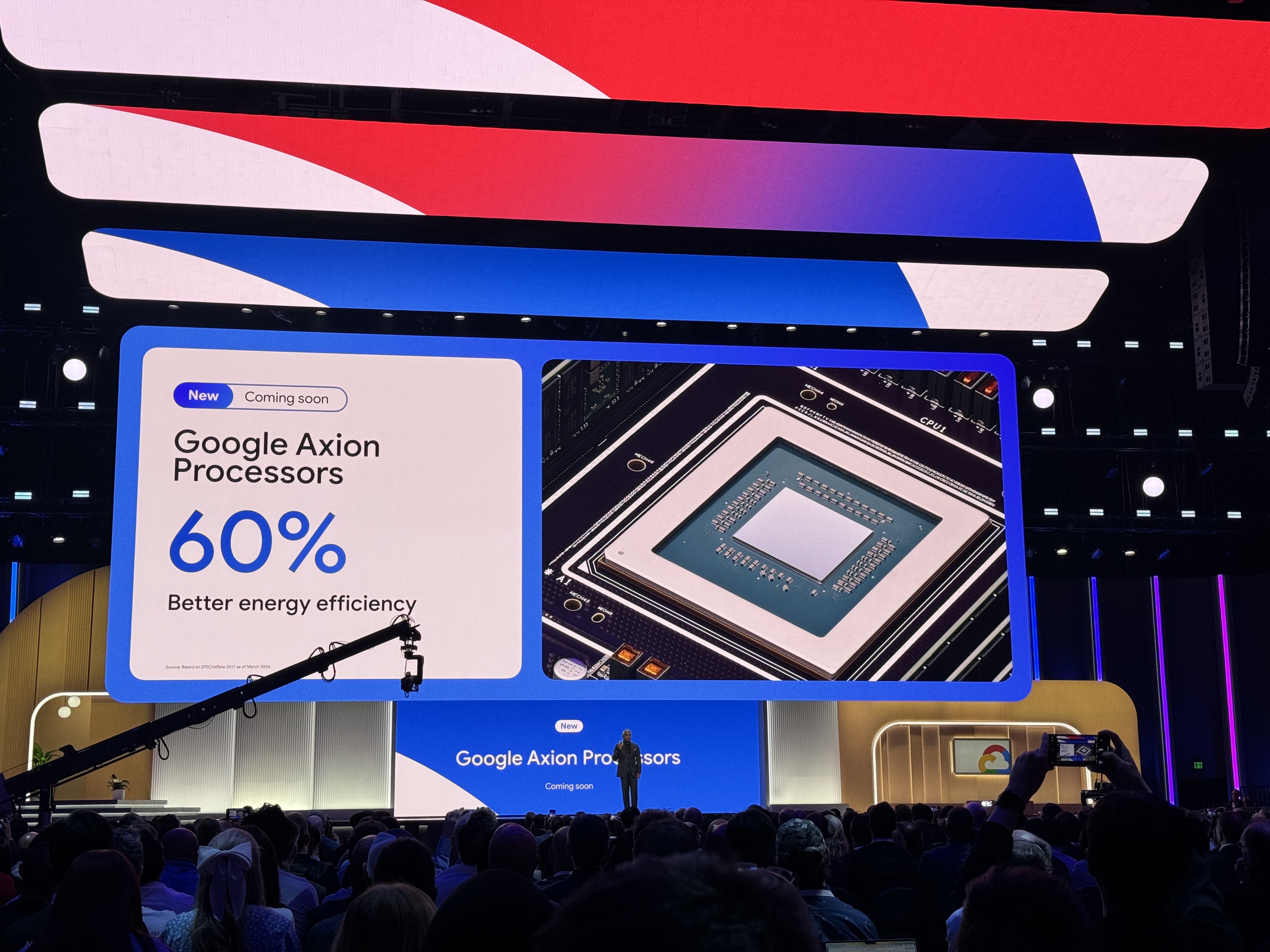

Google announces Axion, its first custom Arm-based data center processor

Google Cloud on Tuesday joined AWS and Azure in announcing its first custom-built Arm processor, dubbed Axion. Based on Arm’s Neoverse V2 design, Axion instances offer 30% better performance than Arm-based instances from competitors like AWS and Microsoft, Google says, along with up to 50% better performance and 60% better energy efficiency than comparable x86-based instances.

Google did not provide any documentation to back up these claims and, like us, you’d probably like to know more about these chips. We asked a lot of questions, but Google politely declined to provide any additional information. No availability dates, no pricing, no additional technical data. Those “benchmark” results? The company wouldn’t even say which x86 instance it was comparing Axion to.

“Technical documentation, including benchmarking and architecture details, will be available later this year,” Google spokesperson Amanda Lam said.

Maybe the chips aren’t even ready yet? After all, it took Google a while to announce Arm chips for its cloud, especially considering that Google has long built its in-house TPU AI chips and, more recently, custom Arm-based mobile chips for its Pixel phones. AWS, by comparison, launched its Graviton chips back in 2018.

To be fair, though, Microsoft only announced its Cobalt Arm chips late last year, and those chips aren’t available to customers yet, either. But Microsoft Azure has offered instances based on Ampere’s Arm processors since 2022.

In a press briefing ahead of Tuesday’s announcement, Google stressed that since Axion is built on an open foundation, Google Cloud customers will be able to bring their existing Arm workloads to Google Cloud without any modifications. That’s really no surprise. Anything else would’ve been a very dumb move on Google Cloud’s part.

“We recently contributed to the SystemReady Virtual Environment, which is Arm’s hardware and firmware interoperability standard that ensures common operating systems and software packages can run seamlessly on Arm-based systems,” Mark Lohmeyer, Google Cloud’s VP for compute and AI/ML infrastructure, explained. “Through this collaboration, we’re accessing a broad ecosystem of cloud customers who have already deployed Arm-based workloads across hundreds of ISVs and open source projects.”

More later this year.