The goal of HP's radical The Machine: Reshaping computing around memory

Image: Peter Sayer


Not every computer owner would be as pleased as Andrew Wheeler that their new machine could run “all weekend” without crashing.

But not everyone’s machine is “The Machine,” an attempt to redefine a relationship between memory and processor that has held since the earliest days of parallel computing.

Wheeler is a vice president and deputy labs director at Hewlett Packard Enterprise. He’s at the Cebit trade show in Hanover, Germany, to tell people about The Machine, a key part of which is on display in HPE’s booth.

Rather than having processors, each surrounded by tiers of RAM, flash and disk, communicate with one another to work out which neighbor holds the freshest copy of the information they need, HPE wants The Machine to offer a large pool of persistent memory that application processors can access directly.

“We want all the warm and hot data to reside in a very large in-memory domain,” Wheeler said. “At the software level, we are trying to eliminate a lot of the shuffling of data in and out of storage.”

Removing that kind of overhead will accelerate the processing of the enormous datasets that are becoming increasingly common in big data analytics and machine learning.
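To give a feel for what accessing data in place means for software, here is a minimal sketch by analogy. It uses an ordinary file-backed memory mapping on a conventional machine as a stand-in for persistent memory; it is not HPE's API, and the file name, pool size and counter layout are invented for illustration.

```python
import mmap
import os
import struct
import tempfile

# Analogy only: an ordinary file-backed mapping stands in for persistent memory.
# This is not HPE's API; the file name and record layout are made up for illustration.
path = os.path.join(tempfile.mkdtemp(), "pool.bin")
POOL_SIZE = 4096

# Create a zero-filled backing file to act as the "memory pool".
with open(path, "wb") as f:
    f.write(b"\x00" * POOL_SIZE)

# Map the pool and update a counter in place with load/store-style access,
# rather than reading a record into a buffer, changing it and writing it back.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), POOL_SIZE) as pool:
        (counter,) = struct.unpack_from("<Q", pool, 0)
        struct.pack_into("<Q", pool, 0, counter + 1)
        pool.flush()  # push the update through to the backing file

# A later mapping of the same pool sees the persisted value.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), POOL_SIZE) as pool:
        (persisted,) = struct.unpack_from("<Q", pool, 0)

print(persisted)
```

The application treats the pool as bytes it can address directly, and the update survives closing and reopening the mapping. On a conventional system the operating system still moves pages to and from disk behind the scenes, which is the kind of shuffling Wheeler describes wanting to eliminate.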

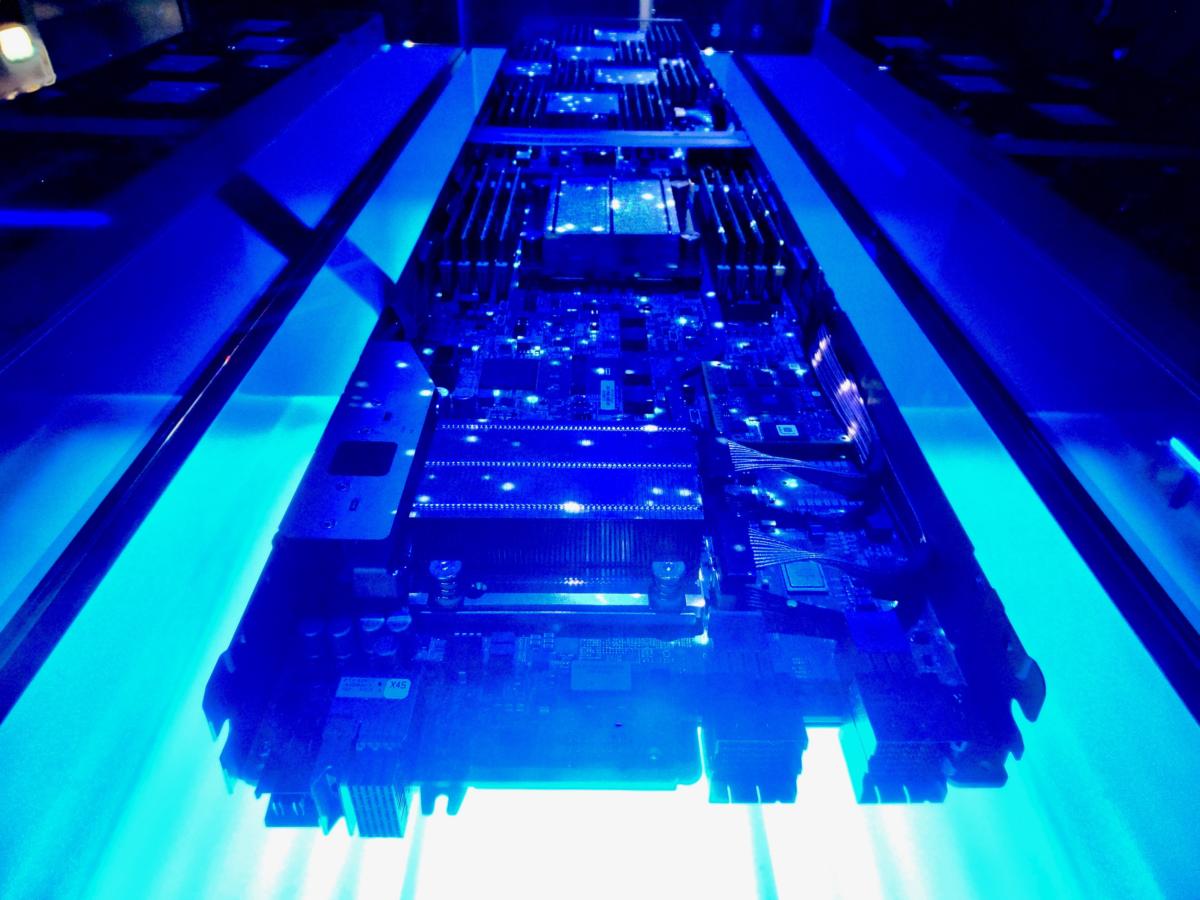

There’s a certain theater to HPE’s presentation of The Machine at Cebit.

In a darkened room at the center of its booth, a glass case is lit from above by a blue glow and shimmering white spots. The case contains one of The Machine’s “node boards,” which combine memory, processing and optical interconnects.


Hewlett Packard Enterprise displayed a node board from The Machine, its memory-centric computer testbed, at the Cebit trade show in March 2017. The ‘front’ of the board, furthest from the camera, contains the shared memory and the heatsinked FPGAs that handle the memory fabric. In the middle beneath another heatsink is the processor, surrounded by more memory. In the foreground is the ‘back’ of the board, holding the FPGAs that manage the optical interconnect to the server rack’s backplane.

The board is long — a little too long, in fact.

The node board’s designers based it on the 21 x 71 centimeter server trays used in HPE’s Apollo high-performance servers, but as they added more and more memory to the storage pool, they ran out of space — so they extended the board and tray by 15 centimeters or so, Wheeler said. The testbed boards project by that amount from the front of the server racks.

Four bulky heatsinks amid the rows of memory modules in the front half of the board mark the locations of the FPGAs (Field Programmable Gate Arrays) that handle the memory fabric, the logic that allows all the processors in the machine to access all the memory. Other FPGAs at the opposite end of the board handle the optical interconnects. Those will all shrink when the FPGAs are replaced by more compact ASICs (Application-Specific Integrated Circuits) later in the development process, Wheeler said.

For now, those FPGAs give HPE some important flexibility, because the design underlying the memory fabric is still changing.

HPE shared the lessons it had learned in designing The Machine’s memory fabric with other hardware manufacturers in the Gen-Z Consortium, which is working to develop an alternative to Intel’s proprietary memory fabric technologies.

“Our testbed allows us to incorporate more of Gen-Z’s features as it is defined,” said Wheeler. The goal will be to have full interoperability with other Gen-Z products when the specification is complete.

Gen-Z set up shop in October 2016, a month or so before HPE booted The Machine for the first time.

Since then, said Wheeler, “We have gone from first boot to now operating at scale, a significant-sized system.”

How significant? The testbed now has 40 nodes and a memory pool of 160 terabytes. In comparison, HPE’s largest production server, the Superdome X, can hold up to 48 TB of RAM using the latest 128 GB DIMMs.
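Those capacity figures are easy to sanity-check. The sketch below assumes binary units (1 TB = 1,024 GB) and, purely for illustration, that the testbed's memory is spread evenly across its nodes, which the article does not state; the variable names are ours.

```python
# Back-of-the-envelope check of the capacity figures quoted above.
# Assumptions (not from HPE): binary units, and memory spread evenly across nodes.
GB_PER_TB = 1024

machine_nodes = 40
machine_pool_tb = 160
superdome_max_tb = 48
dimm_size_gb = 128

# Average share of the pool per node, if it were evenly distributed.
tb_per_node = machine_pool_tb / machine_nodes

# Number of 128 GB DIMMs needed to reach the Superdome X's 48 TB ceiling.
superdome_dimms = superdome_max_tb * GB_PER_TB // dimm_size_gb

# How much larger the testbed's pool is than a maxed-out Superdome X.
pool_ratio = machine_pool_tb / superdome_max_tb

print(f"{tb_per_node:.0f} TB per node on average")
print(f"{superdome_dimms} DIMMs of {dimm_size_gb} GB for {superdome_max_tb} TB")
print(f"Testbed pool is {pool_ratio:.1f}x a maxed-out Superdome X")
```

On these assumptions the testbed averages 4 TB per node, and its pool is a little over three times what a fully populated Superdome X can hold.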

The company has seen a lot of demand for login time on The Machine — more than it can satisfy, for the moment.

However, there are other options for developers wanting to see how in-memory operation can accelerate processing of large data sets, including using a maxed-out Superdome X, or running their code in simulations of The Machine’s hardware.

HPE has tweaked the Linux operating system and other software to take advantage of The Machine’s unusual architecture, and released its changes under open source licenses, making it possible for others to simulate the performance of their applications in the new memory fabric.

The simulations obviously run much slower than the real thing. As HPE gains experience operating The Machine, though, it has been able to calibrate its models and is more confident in the performance improvements the simulations forecast.

Those forecasts suggest that The Machine will be able to reduce the time taken to model the risk inherent in a financial portfolio, say, from almost two hours to just a couple of seconds: a performance gain of three orders of magnitude.
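The arithmetic behind that claim can be checked quickly. The sketch below treats "almost two hours" as a full two hours and "a couple of seconds" as two seconds; both are our rounding of the article's loose figures, not numbers supplied by HPE.

```python
import math

# Rough figures from the forecast: "almost two hours" and "a couple of seconds".
# Assumption: two hours as an upper bound, two seconds as the new runtime.
before_s = 2 * 60 * 60
after_s = 2

speedup = before_s / after_s
orders_of_magnitude = math.log10(speedup)

print(f"Speedup: about {speedup:,.0f}x")
print(f"Orders of magnitude: {orders_of_magnitude:.1f}")
```

With those roundings the speedup lands between a thousandfold and ten-thousandfold, so "three orders of magnitude" is, if anything, a slightly conservative summary.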

At that speed, you could get a lot of work done over a weekend.