OpenAI changes policy to allow military applications

Update: In an additional statement, OpenAI has confirmed that the language was changed in order to accommodate military customers and projects the company approves of.

Our policy does not allow our tools to be used to harm people, develop weapons, for communications surveillance, or to injure others or destroy property. There are, however, national security use cases that align with our mission. For example, we are already working with DARPA to spur the creation of new cybersecurity tools to secure open source software that critical infrastructure and industry depend on. It was not clear whether these beneficial use cases would have been allowed under “military” in our previous policies. So the goal with our policy update is to provide clarity and the ability to have these discussions.

Original story follows:

In an unannounced update to its usage policy, OpenAI has opened the door to military applications of its technologies. While the policy previously prohibited use of its products for the purposes of “military and warfare,” that language has now disappeared, and OpenAI did not deny that it was now open to military uses.

The Intercept first noticed the change, which appears to have gone live on January 10.

Unannounced changes to policy wording happen fairly frequently in tech as the products they govern evolve, and OpenAI is clearly no different. In fact, the company’s recent announcement that its user-customizable GPTs would be rolling out publicly alongside a vaguely articulated monetization policy likely necessitated some changes.

But the change to the no-military policy can hardly be a consequence of this particular new product. Nor can it credibly be claimed that the exclusion of “military and warfare” is just “clearer” or “more readable,” as a statement from OpenAI regarding the update does. It’s a substantive, consequential change of policy, not a restatement of the same policy.

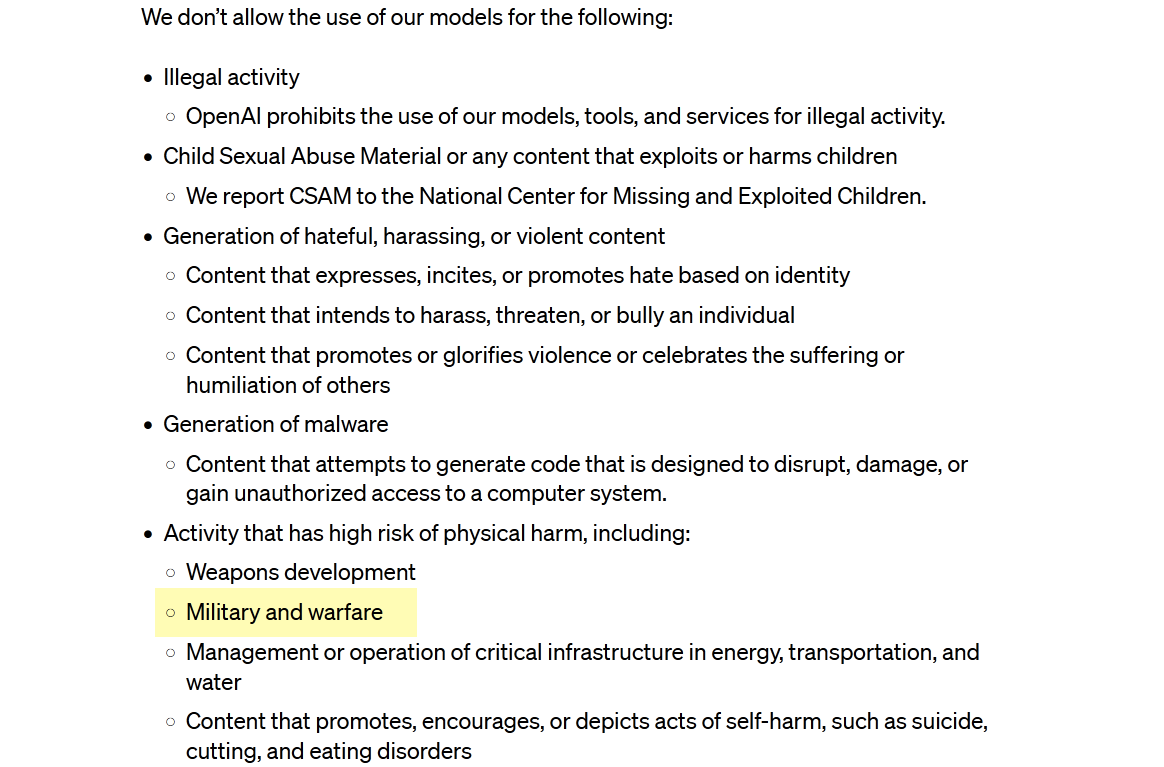

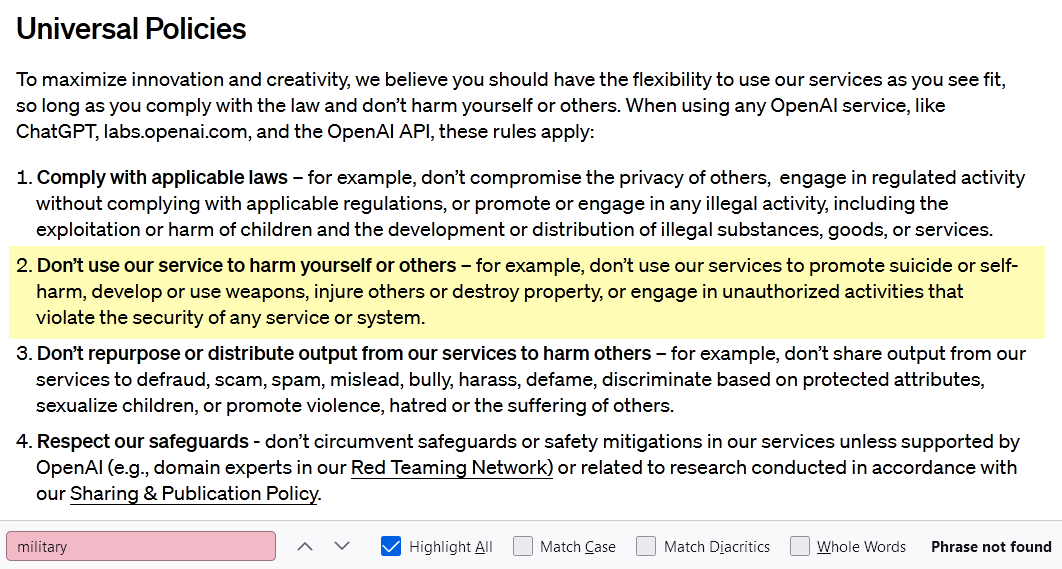

You can read the current usage policy here, and the old one here. Here are screenshots with the relevant portions highlighted:

Obviously the whole thing has been rewritten, though whether it’s more readable or not is more a matter of taste than anything. I happen to think a bulleted list of clearly disallowed practices is more readable than the more general guidelines they’ve been replaced with. But the policy writers at OpenAI clearly think otherwise, and if this gives more latitude for them to interpret favorably or disfavorably a practice hitherto outright disallowed, that is simply a pleasant side effect. “Don’t harm others,” the company said in its statement, “is broad yet easily grasped and relevant in numerous contexts.” More flexible, too.

However, as OpenAI representative Niko Felix explained, there is still a blanket prohibition on developing and using weapons; you can see that it was originally listed separately from “military and warfare.” After all, the military does more than make weapons, and weapons are made by others than the military.

And it is precisely where those categories do not overlap that I would speculate OpenAI is examining new business opportunities. Not everything the Defense Department does is strictly warfare-related; as any academic, engineer or politician knows, the military establishment is deeply involved in all kinds of basic research, investment, small business funds and infrastructure support.

OpenAI’s GPT platforms could be of great use to, say, army engineers looking to summarize decades of documentation of a region’s water infrastructure. It’s a genuine conundrum at many companies how to define and navigate their relationship with government and military money. Google’s “Project Maven” famously took one step too far, though few seemed to be as bothered by the multibillion-dollar JEDI cloud contract. It might be OK for an academic researcher on an Air Force Research Laboratory grant to use GPT-4, but not a researcher inside the AFRL working on the same project. Where do you draw the line? Even a strict “no military” policy has to stop after a few removes.

That said, the total removal of “military and warfare” from OpenAI’s prohibited uses suggests that the company is, at the very least, open to serving military customers. I asked the company to confirm or deny that this was the case, warning them that the language of the new policy made it clear that anything but a denial would be interpreted as a confirmation.

As of this writing they have not responded. I will update this post if I hear back.

Update: OpenAI offered the same statement given to The Intercept, and did not dispute that it is open to military applications and customers.